Reality Modeling Language

To create satisfying AR experiences, it is necessary to describe reality itself adequately: not just the physical environment, but also any virtual layers on top of it, so that interoperable interaction spaces can be created (even if it is just reusing a tool from one experience as a makeshift stick to press an out-of-reach button in another). We aim to achieve this through the creation of a new Reality Modeling Language (RML), which enables the description of both real and virtual entities in a semantic, spatial environment.

1- Backwards Compatibility with Existing Approaches

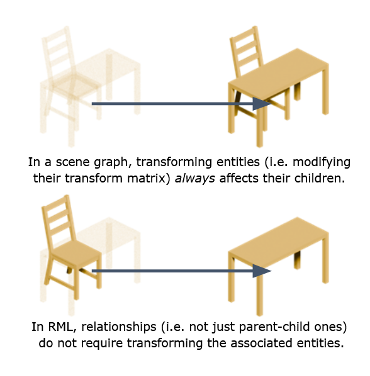

The current scene graph model is based on hierarchical structures in which each child node is spatially linked to its single parent. This is useful, but it often results in strange relationships (e.g. making a chair a child of a table).

For compatibility with current standards, RML can replicate their data structure, but it also allows relationships between entities that do not imply a spatial transformation.
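The contrast with a scene graph can be made concrete with a minimal Python sketch. It assumes a purely hypothetical data model: the `Entity` and `Relation` classes and the `placed_at` relation kind are illustrative names, not RML syntax. Both the chair and the table are spatial children of the room, and their association is a separate relation that carries no transformation.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Entity:
    """Hypothetical RML entity (illustrative names, not actual RML syntax)."""
    name: str
    # Position relative to the spatial parent, as in a scene graph.
    position: tuple[float, float, float] = (0.0, 0.0, 0.0)
    parent: Entity | None = None


@dataclass
class Relation:
    """A semantic link between entities that carries no transformation."""
    kind: str
    source: Entity
    target: Entity


def world_position(e: Entity) -> tuple[float, float, float]:
    # Accumulate translations up the parent chain (rotation/scale omitted).
    x, y, z = e.position
    p = e.parent
    while p is not None:
        x, y, z = x + p.position[0], y + p.position[1], z + p.position[2]
        p = p.parent
    return (x, y, z)


room = Entity("room")
# Both pieces of furniture are spatial children of the room...
table = Entity("table", (2.0, 0.0, 1.0), parent=room)
chair = Entity("chair", (2.5, 0.0, 1.0), parent=room)
# ...while their association is a relation, not a parent-child transform.
relations = [Relation("placed_at", chair, table)]
```

The relation is not a transform, so moving the table leaves the chair's world position unchanged. A scene graph that parents the chair to the table could not express this.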

2- One Description, Multiple Representations

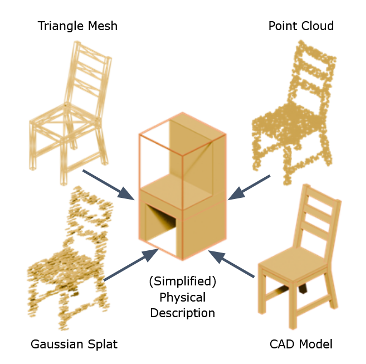

Instead of forcing a particular portrayal, entities can have multiple visual representations (triangle mesh, CAD model, point cloud, Gaussian splat, etc.).

To enable simulations, however, they will share a common, simplified description (a basic spatial definition with physical properties such as colour, hardness, conductivity, radioactivity, etc.).

Furthermore, it might be useful to create material libraries, so that the components storing an entity's physical properties can be shared across entities.
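The following is a minimal Python sketch of this idea, assuming hypothetical `Material` and `Entity` types. Each entity keeps a simplified physical description that points to a shared entry in a material library. It also holds any number of visual representations keyed by type. The property values and file names are illustrative placeholders only.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class Material:
    """Simplified physical properties (fields are illustrative assumptions)."""
    name: str
    colour: str
    hardness: float  # illustrative relative scale: 0 (soft) to 1 (hard)
    conductive: bool


# Shared material library: many entities reference the same Material object.
MATERIALS = {
    "oak": Material("oak", "brown", 0.4, False),
    "steel": Material("steel", "grey", 0.9, True),
}


@dataclass
class Entity:
    """Hypothetical entity: one simplified description, many representations."""
    name: str
    size_m: tuple[float, float, float]  # bounding box used for simulation
    material: Material
    # Visual representations keyed by type; the file names are placeholders.
    representations: dict[str, str] = field(default_factory=dict)

    def representation(self, supported: list[str]) -> str | None:
        # Return the first representation type the client is able to render.
        for kind in supported:
            if kind in self.representations:
                return self.representations[kind]
        return None


table = Entity("table", (1.6, 0.9, 0.75), MATERIALS["oak"],
               {"mesh": "table.glb", "point_cloud": "table_scan.ply"})
chair = Entity("chair", (0.5, 0.5, 0.9), MATERIALS["oak"], {"mesh": "chair.glb"})
```

A simulation only reads `size_m` and `material`, while each client picks whichever representation it supports. Both entities share a single `oak` entry instead of duplicating its properties.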

3- Spatial Contexts/Metadata

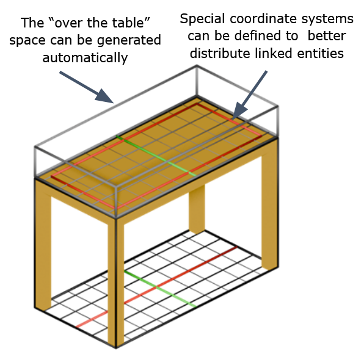

Every entity in RML has its own “space”, but unlike the Euclidean spaces commonly used in most 3D software tools, these spaces can have their own origin points, boundaries and coordinate systems.

Furthermore, these spaces can contain their own subspaces and automatically generate new ones.

In this way, it will be possible to create better distribution systems and perform complex spatial queries.
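The Python sketch below shows one way nested spaces could answer a simple spatial query: "which spaces contain this point?". Each space has its own origin, units and boundary. The `Space` class, its fields and the example names are hypothetical. Rotations and non-box boundaries are left out for brevity.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Space:
    """Hypothetical RML space with its own origin, units and boundary.

    origin: where this space's origin lies, in its parent's coordinates.
    unit:   length of one local unit, measured in parent units.
    bounds: axis-aligned box (min corner, max corner) in local coordinates.
    """
    name: str
    origin: tuple[float, float, float]
    unit: float
    bounds: tuple[tuple[float, float, float], tuple[float, float, float]]
    subspaces: list[Space] = field(default_factory=list)

    def to_local(self, p: tuple[float, float, float]) -> tuple[float, ...]:
        # Translate to this space's origin, then rescale to its local units.
        return tuple((c - o) / self.unit for c, o in zip(p, self.origin))

    def contains(self, local_p: tuple[float, ...]) -> bool:
        lo, hi = self.bounds
        return all(a <= c <= b for c, a, b in zip(local_p, lo, hi))

    def locate(self, p: tuple[float, float, float]) -> list[str]:
        """Names of the nested spaces containing p (given in parent coords)."""
        local = self.to_local(p)
        if not self.contains(local):
            return []
        for sub in self.subspaces:
            chain = sub.locate(local)
            if chain:
                return [self.name] + chain
        return [self.name]


# A building measured in metres containing a room measured in centimetres.
room = Space("room_101", origin=(5.0, 0.0, 0.0), unit=0.01,
             bounds=((0, 0, 0), (400, 300, 250)))
building = Space("building_A", origin=(100.0, 200.0, 0.0), unit=1.0,
                 bounds=((0, 0, 0), (20, 15, 10)), subspaces=[room])
```

Each space only knows its own frame, so the query converts the point step by step as it descends. It returns the chain of enclosing spaces, or an empty list when the point lies outside the building.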

4- Extensible Semantic System

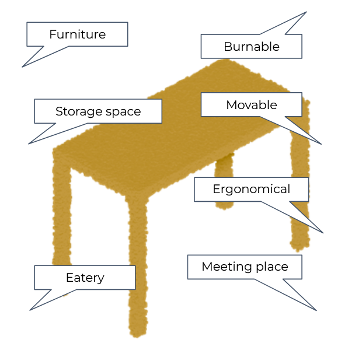

Every entity can have semantic properties of its own, but these can also be defined by the classes it belongs to or by its relations with other entities.

Instead of trying to model the entire Universe in one go, RML uses an extensible system that enables experts from different disciplines to build (and standardize) the model piece by piece.

This semantic information can be stored in a separate data structure (much as CSS is kept separate from HTML).
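The Python sketch below is a rough illustration of the CSS analogy. It keeps semantic rules in a separate table and resolves an entity's properties as a cascade. Rules apply from the most general class to the most specific one, then relation-derived rules, and finally entity-specific overrides. The selector syntax, class hierarchy and property names are all hypothetical and not part of RML.

```python
# Hypothetical class hierarchy, contributed piece by piece by domain experts.
CLASS_PARENT = {"Furniture": None, "Seat": "Furniture", "Chair": "Seat"}

# Semantic "stylesheet", stored apart from the spatial description (as CSS is
# apart from HTML). ".Name" selects a class, "#id" selects a single entity.
SEMANTICS = {
    ".Furniture": {"movable": True, "walkable": False},
    ".Seat": {"sittable": True},
    ".Chair": {"capacity": 1},
    "#chair-42": {"owner": "reception"},
}

# Properties implied by an entity's relations to other entities.
RELATION_SEMANTICS = {"bolted_to": {"movable": False}}


def class_chain(cls):
    """Return the class and all its ancestors, most general first."""
    chain = []
    while cls is not None:
        chain.append(cls)
        cls = CLASS_PARENT[cls]
    return chain[::-1]


def resolve(entity_id, cls, relations=()):
    """Cascade: classes (general to specific), then relations, then the entity."""
    props = {}
    for c in class_chain(cls):
        props.update(SEMANTICS.get("." + c, {}))
    for kind in relations:
        props.update(RELATION_SEMANTICS.get(kind, {}))
    props.update(SEMANTICS.get("#" + entity_id, {}))
    return props
```

Later rules override earlier ones. An expert could therefore add a new class or relation rule without editing the spatial description itself.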

As explained above, RML is being refined through the creation of a developer sandbox in which different conceptual models can be tested before being incorporated into the language itself. In this way, it is possible to validate concepts from existing standards (such as BIM-IFC, CityGML or IndoorGML), maximising the compatibility of RML with current tools and environments.

This approach has enabled us to further validate the language by creating prototypes for several use cases.

Spatial Navigation Prototype

The spatial computing system integrated into the very core of RML not only enables the advanced description of spaces (with complex shapes, geographic information, distribution systems, portal-based connections, etc.) but also allows semantic information to be integrated to facilitate real-time pathfinding and navigation.

To test the viability of this system, we have created a prototype with a relatively complex scene in which four different types of agents (a human operator, a flying drone, a quadruped robot and a wheeled robot) must travel to a room in another building. Initially, the simulation environment allows all agents to reach their destination. The evaluator can then introduce obstacles and hazards into some of the spaces, forcing the agents to re-evaluate their intended paths and attempt to overcome those traversal challenges.
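The core of this scenario can be sketched as a shortest-path search over a graph of spaces connected by portals. Each agent type may only use the portals it can traverse. This is an assumed model for illustration (Dijkstra's algorithm over hypothetical space names, portal types and costs), not the prototype's actual implementation.

```python
import heapq

# Portals connect two spaces and require a traversal mode (hypothetical data):
# (space_a, space_b, mode, cost)
PORTALS = [
    ("A_lobby", "street", "door", 5.0),
    ("street", "B_lobby", "door", 40.0),
    ("B_lobby", "B_floor1", "stairs", 8.0),
    ("B_lobby", "B_floor1", "elevator", 12.0),
    ("street", "B_floor1", "window", 30.0),
    ("B_floor1", "room_105", "door", 6.0),
]

# Traversal modes each agent type can use (illustrative assumptions).
CAPABILITIES = {
    "human": {"door", "stairs", "elevator"},
    "drone": {"door", "window"},
    "quadruped": {"door", "stairs"},
    "wheeled": {"door", "elevator"},
}


def plan(agent, start, goal, blocked_spaces=frozenset()):
    """Dijkstra over the space graph, using only portals the agent can traverse.

    Returns (cost, [spaces]) or None if the goal is unreachable.
    """
    graph = {}
    for a, b, mode, cost in PORTALS:
        if mode in CAPABILITIES[agent]:
            graph.setdefault(a, []).append((b, cost))
            graph.setdefault(b, []).append((a, cost))
    queue = [(0.0, start, [start])]
    visited = set()
    while queue:
        cost, space, path = heapq.heappop(queue)
        if space == goal:
            return cost, path
        if space in visited:
            continue
        visited.add(space)
        for nxt, step in graph.get(space, []):
            # Hazardous or obstructed spaces are simply excluded from the search.
            if nxt not in visited and nxt not in blocked_spaces:
                heapq.heappush(queue, (cost + step, nxt, path + [nxt]))
    return None
```

Marking `B_lobby` as hazardous (`blocked_spaces={"B_lobby"}`) leaves the human without a route, while the drone keeps its window path. This mirrors how the evaluator's obstacles force agents to re-plan.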

The developer sandbox where this prototype is being built is available upon request.

Geospatial Data Visualisation Prototype

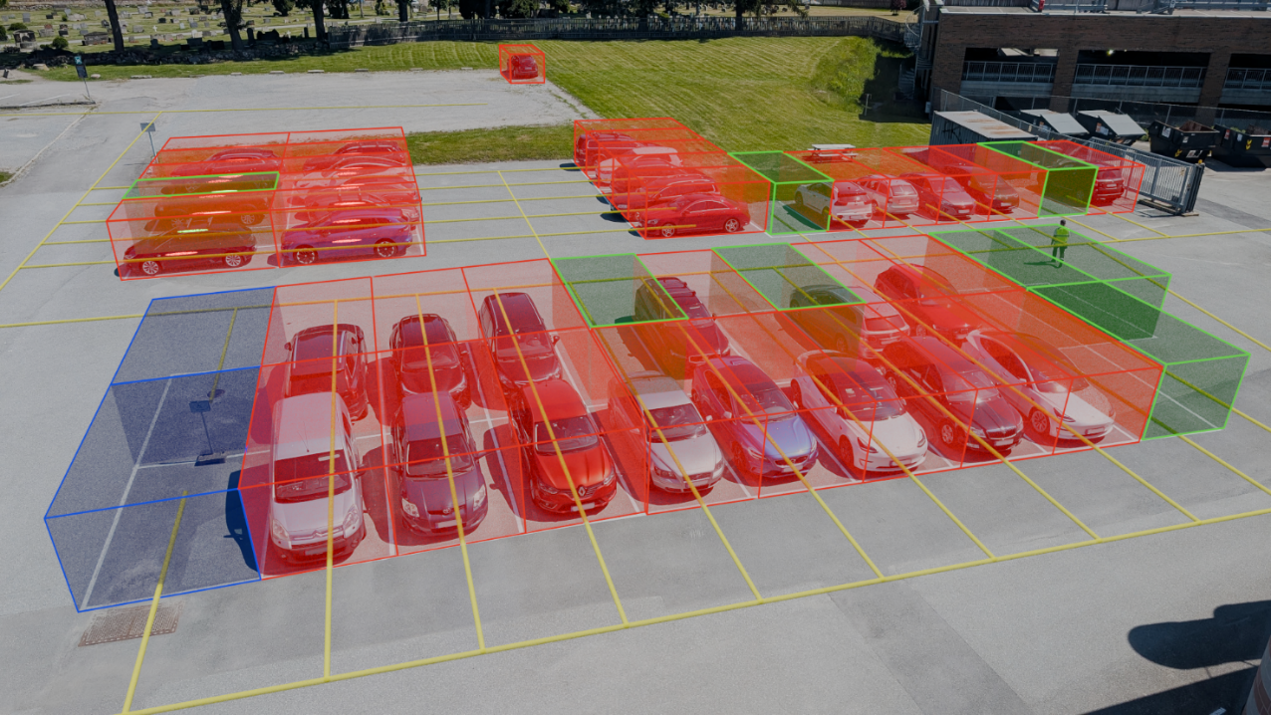

Unlike most 3D standards, geospatial formats such as CityGML or OpenStreetMap provide systems for semantically describing spaces. A good way to illustrate the value of this approach is to use it to solve a common problem in most urban environments: finding a free parking space.

In this prototype, we import data from the OpenStreetMap database to create an RML document and use a WebXR-enabled mobile browser to visualise the different types of parking spaces (including accessible spaces and charging stations) and guide drivers to the most appropriate one for their context. At the time of writing this report, the identification of free and occupied spaces is performed manually; however, we intend to utilise sensors to automate this task.
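The following is a minimal Python sketch of the selection step. It assumes the OpenStreetMap parking data has already been imported as simple records, each with a space type and a manually set `occupied` flag. The type values `standard`, `disabled` and `charging` are hypothetical. The sketch picks the nearest free space that matches the driver's needs, using the haversine great-circle distance; the coordinates are illustrative.

```python
import math

# Parking spaces as they might look after importing OpenStreetMap data.
# Types and coordinates are illustrative; "occupied" is set by hand, as in
# the prototype, until sensors take over.
SPACES = [
    {"id": 1, "lat": 0.0, "lon": 0.0, "type": "standard", "occupied": True},
    {"id": 2, "lat": 0.0, "lon": 0.001, "type": "standard", "occupied": False},
    {"id": 3, "lat": 0.0005, "lon": 0.0, "type": "disabled", "occupied": False},
    {"id": 4, "lat": 0.0, "lon": 0.002, "type": "charging", "occupied": False},
    {"id": 5, "lat": 0.0, "lon": 0.0003, "type": "charging", "occupied": True},
]

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (spherical approximation)


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two lat/lon points."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def best_space(lat, lon, accepted_types, spaces=SPACES):
    """Nearest free space whose type suits the driver, or None if none is free."""
    free = [s for s in spaces if not s["occupied"] and s["type"] in accepted_types]
    if not free:
        return None
    return min(free, key=lambda s: haversine_m(lat, lon, s["lat"], s["lon"]))
```

A driver with an electric vehicle would pass `{"charging"}`, and a driver who needs an accessible space would pass `{"disabled"}`. Straight-line distance is only a placeholder; a real guide would rank candidates by driving route.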