Open Source Visual Positioning Service


Visual Positioning

For digital objects to appear at the exact same physical location across users and over time, the scene needs to be recognised, and the user's AR device needs to be localised very precisely within the scene. This is usually done by capturing an image with the mobile camera and uploading it to a localisation service, which aligns the query image with a previously built point cloud map of the scene. Widely used methods for spatial mapping include structure from motion (SfM) and visual simultaneous localisation and mapping (vSLAM). The map point cloud contains representative points of the environment (the “feature points”), which can be robustly recognised from multiple viewpoints. Such feature point maps grow very large with the size of the represented scene, which makes searching them slow. Therefore, it is advantageous to split the scene into smaller maps and to constrain the search area using prior information. Such information can come from multiple sources, for example a coarse GPS coordinate, currently visible WiFi SSIDs, Bluetooth beacons, or 5G positioning, and even from a previous successful localisation.
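To make the idea of constraining the search concrete, here is a minimal sketch that ranks small maps using two of the priors listed above: overlap between the WiFi SSIDs seen now and those recorded with each map, and the map of the previous successful localisation. The `MapInfo` schema, the Jaccard scoring, and the `max_maps` cut-off are hypothetical illustration choices, not part of any service described here.

```python
from dataclasses import dataclass, field


@dataclass
class MapInfo:
    """Hypothetical metadata stored with each small map of the scene."""
    map_id: str
    wifi_ssids: set[str] = field(default_factory=set)


def jaccard(a, b):
    """Jaccard similarity of two SSID sets (0 when both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def rank_candidate_maps(maps, seen_ssids, last_map_id=None, max_maps=3):
    """Rank maps by WiFi overlap with the currently seen SSIDs.

    Maps sharing no SSID with the query are dropped. If a previous localisation
    succeeded, its map is always tried first.
    """
    scored = [(jaccard(m.wifi_ssids, seen_ssids), m.map_id) for m in maps]
    ranked = [map_id for score, map_id in sorted(scored, reverse=True) if score > 0]
    if last_map_id is not None:
        ranked = [last_map_id] + [m for m in ranked if m != last_map_id]
    return ranked[:max_maps]
```

Only the returned map IDs would then be searched with the expensive visual matching.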

GeoPose and GeoPoseProtocol

The OGC GeoPose standard for describing the poses of objects

The OSCP and this project use the Open Geospatial Consortium (OGC) GeoPose standard for exchanging the location and orientation of real or virtual geometric objects in a coordinate reference system. GeoPose extends latitude, longitude, and altitude coordinates with heading, tilt, and roll to describe the orientation of an object or device with six degrees of freedom (6DoF).
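As an illustration, the sketch below builds the two simplest GeoPose encodings as Python dictionaries ready for JSON serialisation. The key names follow our reading of the OGC GeoPose 1.0 Basic-YPR and Basic-Quaternion JSON encodings (`position` with `lat`, `lon`, `h`; `angles` with `yaw`, `pitch`, `roll` in degrees; or a unit `quaternion`); treat them as an assumption to check against the standard. The coordinates are arbitrary example values.

```python
import json


def basic_ypr_geopose(lat, lon, h, yaw, pitch, roll):
    """GeoPose Basic-YPR object: angles in degrees, height h in metres."""
    return {
        "position": {"lat": lat, "lon": lon, "h": h},
        "angles": {"yaw": yaw, "pitch": pitch, "roll": roll},
    }


def basic_quaternion_geopose(lat, lon, h, x, y, z, w):
    """GeoPose Basic-Quaternion object: (x, y, z, w) should be a unit quaternion."""
    return {
        "position": {"lat": lat, "lon": lon, "h": h},
        "quaternion": {"x": x, "y": y, "z": z, "w": w},
    }


# Arbitrary example pose with identity orientation.
pose = basic_quaternion_geopose(47.4979, 19.0402, 110.0, 0.0, 0.0, 0.0, 1.0)
print(json.dumps(pose))
```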

The OARC GeoPoseProtocol for interoperable visual positioning

Several VPSes already exist; however, they all use proprietary protocols between server and client, which ties each client to the areas mapped by its own provider. A standardised API for visual localisation enables a client to switch between two or more services while on the move, allowing true location-based discovery of information regardless of which VPS was used for mapping. For this reason, the OARC has proposed the GeoPoseProtocol (GPP) for image-to-GeoPose communication between any VPS client and any VPS service. GPP is an application-layer protocol for visual positioning using GeoPose. It standardises VPS queries, which include images and other sensor data, and VPS responses, which encapsulate the 6DoF camera pose in the world as a GeoPose.
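The sketch below shows the general shape of such a query: one camera image, base64-encoded so it can travel inside a JSON body, plus an optional coarse GNSS prior. The field names (`sensorReadings`, `cameraReadings`, `imageBytes`, `geolocationReadings`, and so on) are a simplified, hypothetical rendering loosely modelled on GPP; consult the GeoPoseProtocol specification for the exact schema.

```python
import base64
import time
import uuid


def build_geopose_request(jpeg_bytes, width, height, lat=None, lon=None):
    """Assemble a simplified, hypothetical GPP-style localisation query.

    Field names are illustrative only and may differ from the real protocol.
    """
    now_ms = int(time.time() * 1000)
    request = {
        "id": str(uuid.uuid4()),
        "timestamp": now_ms,
        "type": "geopose",
        "sensorReadings": {
            "cameraReadings": [{
                "sensorId": "camera0",
                "timestamp": now_ms,
                "imageFormat": "JPG",
                "size": [width, height],
                # Binary image data is carried as base64 text inside the JSON body.
                "imageBytes": base64.b64encode(jpeg_bytes).decode("ascii"),
            }],
            "geolocationReadings": [],
        },
    }
    # Optional coarse position prior, e.g. from the phone's GPS.
    if lat is not None and lon is not None:
        request["sensorReadings"]["geolocationReadings"].append(
            {"sensorId": "gnss0", "timestamp": now_ms, "latitude": lat, "longitude": lon}
        )
    return request
```

The resulting dictionary would be serialised with `json.dumps` and POSTed to a VPS endpoint.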


Overview of the Open Source Visual Positioning System developed by Nokia Bell Labs under the lead of Gábor Sörös

The most significant development of this project is our own end-to-end, open-source visual positioning service, which researchers and other interested parties can use to experiment with visual positioning in a universally accessible form.

There is a vast amount of academic research on the visual localisation problem, and it is still far from being robustly solved. Developing a new method was not feasible within the time frame of this project. Instead, we take a well-known localisation framework from the academic community (Hierarchical-Localization, HLOC) and make it available as a service. Even though the core algorithms already exist, considerable effort is still required to automate map creation and to make the algorithms available as part of a Web service.

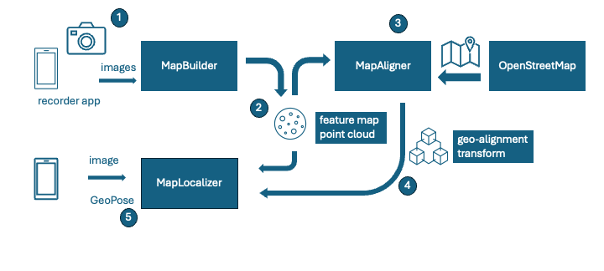

The main contribution of the OSCAR4US project is a simple but fully open-source visual positioning service, including tools and services for image capture, map creation, map geo-alignment, and localisation. Our system was developed by project participants at Nokia Bell Labs in Budapest. The system comprises the MapBuilder, MapAligner, and MapLocaliser components. The maps are built from smartphone images collected in a special sensing mode.

Overview of our open-source Visual Positioning Service

Image collection application for mapping

Visual positioning services, such as Augmented City or Immersal, typically provide proprietary image collection applications to aid in mapping. These mobile apps are closed source, and their capture formats are usually closed as well. To support openness and interoperability, we originally planned to write our own image collector app, which, besides capturing images, also collects extra sensor data from the smartphone (gravity vector direction, relative motion between captured images, nearby WiFi access points, etc.). In a previous NGI Atlantic project, we developed such an open-source app for Android, and we now wanted to add an iOS app. However, we found that the existing open-source StrayScanner app can fulfil our needs, so we decided to use this app for image collection.

Map Builder

The image data collected using the StrayScanner app can be uploaded to our MapBuilder service, which we built from scratch. This service preprocesses, formats, rotates, scales, and sorts the images before mapping.
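One typical preprocessing step is thinning a continuous recording down to frames that are far enough apart to be useful for mapping. The sketch below selects keyframes by a minimum camera translation, reading a per-frame odometry CSV. It assumes the column names `frame, x, y, z` of StrayScanner's `odometry.csv` export (an assumption; check your export), and the 0.25 m threshold is an arbitrary example; the actual MapBuilder selection logic is not described here.

```python
import csv
import math


def select_keyframes(odometry_lines, min_translation_m=0.25):
    """Return frame indices spaced at least `min_translation_m` apart along the
    recorded camera trajectory.

    `odometry_lines` is any iterable of CSV lines (e.g. an open file) with a
    header containing at least the columns `frame`, `x`, `y`, `z`.
    """
    reader = csv.DictReader(odometry_lines, skipinitialspace=True)
    selected, last_pos = [], None
    for row in reader:
        pos = (float(row["x"]), float(row["y"]), float(row["z"]))
        # Keep the first frame, then any frame that moved far enough from the last kept one.
        if last_pos is None or math.dist(pos, last_pos) >= min_translation_m:
            selected.append(int(row["frame"]))
            last_pos = pos
    return selected
```

Typical use: `with open("odometry.csv", newline="") as f: frames = select_keyframes(f)`.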

Mapping and localisation algorithms

The core mapping and localisation algorithms were not developed in this project; instead, we utilised the Hierarchical Localisation (HLOC) framework, which is illustrated in the figure below. This framework is widely used in visual localisation research and benchmarking, and it already includes several state-of-the-art algorithms for mapping and localisation.

Maps are created from images by jointly estimating the 3D points of the environment and the viewpoints (poses) of the cameras that captured the images. The traditional computer vision pipeline for reconstruction (also known as structure from motion, SfM) consists of the following steps: image pair selection, image feature extraction, feature matching, triangulation of points, and camera pose estimation. The result is a 3D point cloud of representative corner points in the scene, along with all the camera parameters.
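To illustrate the triangulation step, the sketch below uses the classic midpoint method: given two camera centres and the viewing rays towards the same matched feature, it returns the point halfway between the closest points of the two rays. This is a textbook two-view method chosen for clarity; SfM tools such as COLMAP, which HLOC uses for reconstruction, rely on more robust multi-view triangulation followed by bundle adjustment.

```python
def _dot(u, v):
    """Dot product of two 3D vectors."""
    return sum(a * b for a, b in zip(u, v))


def triangulate_midpoint(c1, d1, c2, d2):
    """Midpoint of the shortest segment between rays c1 + s*d1 and c2 + t*d2.

    c1, c2 are camera centres; d1, d2 are ray directions towards the same
    feature observed in both images.
    """
    w0 = [p - q for p, q in zip(c1, c2)]
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are (nearly) parallel; the point cannot be triangulated")
    s = (b * e - c * d) / denom  # parameter of the closest point on ray 1
    t = (a * e - b * d) / denom  # parameter of the closest point on ray 2
    p1 = [ci + s * di for ci, di in zip(c1, d1)]
    p2 = [ci + t * di for ci, di in zip(c2, d2)]
    return [(u + v) / 2 for u, v in zip(p1, p2)]
```

With noise-free rays that intersect, the result is exactly the intersection point; with noisy observations it is a reasonable initial estimate for later optimisation.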

Both mapping and localisation can be sped up significantly if we can quickly find a set of images similar to the query and perform the costly local feature matching only against that small subset of map images. This is the main idea behind hierarchical localisation. Each image is first described by a single, so-called global feature vector, which is stored in a global index. Similar images have vectors that are close to each other in this abstract vector space, while dissimilar images are far apart. The processing pipeline therefore begins with a retrieval step, in which similar images are found, and only these are passed to the subsequent steps. Then, local feature points are extracted, and each image is matched with the others that cover the same part of the scene. Finally, the 3D structure of the scene and the camera poses are jointly optimised.
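The retrieval step can be sketched as a nearest-neighbour search over global descriptors. The toy three-dimensional vectors and the brute-force cosine-similarity scan below are illustration choices; HLOC uses learned global descriptors (for example NetVLAD) with hundreds or thousands of dimensions, and a large map would use an approximate nearest-neighbour index instead of a linear scan.

```python
import math


def cosine(u, v):
    """Cosine similarity between two global descriptors."""
    nu = math.sqrt(sum(x * x for x in u))
    nv = math.sqrt(sum(x * x for x in v))
    return sum(a * b for a, b in zip(u, v)) / (nu * nv)


def retrieve(query_desc, index, k=2):
    """Return the names of the k map images whose global descriptors are most
    similar to the query; only these go on to local feature matching."""
    ranked = sorted(index.items(), key=lambda item: cosine(query_desc, item[1]), reverse=True)
    return [name for name, _ in ranked[:k]]


# Toy global index: image name -> global descriptor.
index = {"img_a": [1.0, 0.0, 0.0], "img_b": [0.9, 0.1, 0.0], "img_c": [0.0, 0.0, 1.0]}
```

Here `retrieve([1.0, 0.0, 0.0], index)` keeps `img_a` and `img_b` and discards the dissimilar `img_c`.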

We have created a Web frontend from scratch for uploading new image sets for mapping and for tracking the status of processing pipelines. The processing backend performs various image manipulation tasks and wraps the HLOC mapping framework. HLOC supports several feature point types, filtering strategies, and matching strategies, all of which can be configured. Our MapBuilder configures such a pipeline and runs it to manage map creation. By building on this framework, we can evaluate a substantial number of existing algorithms. New algorithms continue to be added to HLOC with every release, which makes the latest methods easy to adopt in our service.
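For orientation, the sketch below wires up a retrieval-based SfM pipeline in the way HLOC's own example notebook does: NetVLAD retrieval to select image pairs, SuperPoint features, SuperGlue matching, and reconstruction. It is not the MapBuilder code. The configuration names (`netvlad`, `superpoint_aachen`, `superglue`) and the output file names are taken from HLOC's examples as we recall them and may change between HLOC releases. The import is placed inside the function only so that this module loads without HLOC installed.

```python
from pathlib import Path


def build_sfm_map(images: Path, outputs: Path, num_matched: int = 5):
    """Run a retrieval-based HLOC SfM pipeline over a folder of images."""
    # Deferred import: HLOC (and its deep-learning dependencies) are needed only at run time.
    from hloc import extract_features, match_features, pairs_from_retrieval, reconstruction

    sfm_pairs = outputs / "pairs-netvlad.txt"
    sfm_dir = outputs / "sfm_superpoint+superglue"

    retrieval_conf = extract_features.confs["netvlad"]          # global descriptors
    feature_conf = extract_features.confs["superpoint_aachen"]  # local keypoints
    matcher_conf = match_features.confs["superglue"]            # local matcher

    # 1. Retrieval: pair each image only with its most similar images.
    retrieval_path = extract_features.main(retrieval_conf, images, outputs)
    pairs_from_retrieval.main(retrieval_path, sfm_pairs, num_matched=num_matched)

    # 2. Local feature extraction and matching, restricted to the retrieved pairs.
    feature_path = extract_features.main(feature_conf, images, outputs)
    match_path = match_features.main(matcher_conf, sfm_pairs, feature_conf["output"], outputs)

    # 3. Triangulation and bundle adjustment (HLOC delegates this to COLMAP via pycolmap).
    return reconstruction.main(sfm_dir, images, sfm_pairs, feature_path, match_path)
```

Swapping in a different feature type or matcher amounts to choosing another entry from the corresponding `confs` dictionary, which is what makes the framework convenient for evaluating many algorithms.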

MapBuilder Web GUI: an iPhone can directly upload a zipped StrayScanner recording, which is then automatically extracted, formatted, and filtered (image selection). Different datasets (maps) appear in different rows.

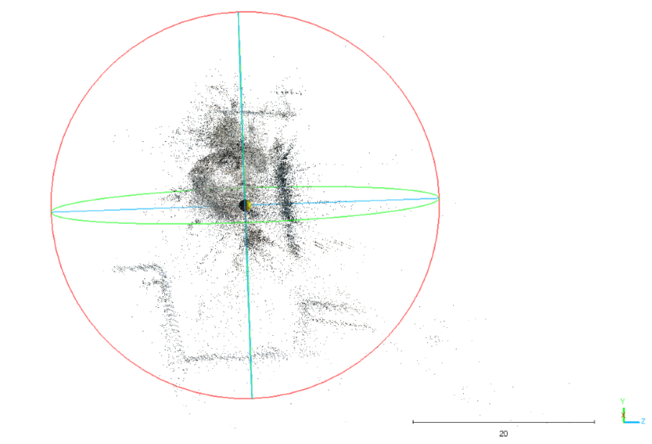

At the end of mapping, the feature map can be exported in the PLY 3D point cloud format for visualisation. A feature map of the square in front of the Nokia Budapest office is shown in the figure below. The point cloud is sparse because it contains only the most distinctive features of the environment. As mentioned before, the HLOC framework supports various feature types, so our service can easily be extended with new feature types in the future.
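The PLY format is simple enough to write by hand. The sketch below produces an ASCII PLY document for a list of 3D points with optional 8-bit RGB colours, following the standard PLY header layout (`element vertex`, `property ...`, `end_header`). It is a minimal illustration, not the exporter used by MapBuilder; real maps would typically be written in binary PLY for compactness.

```python
def ply_text(points, colors=None):
    """Return an ASCII PLY document for 3D points and optional 0-255 RGB colours."""
    header = [
        "ply",
        "format ascii 1.0",
        f"element vertex {len(points)}",
        "property float x",
        "property float y",
        "property float z",
    ]
    if colors is not None:
        header += ["property uchar red", "property uchar green", "property uchar blue"]
    header.append("end_header")

    body = []
    for i, (x, y, z) in enumerate(points):
        line = f"{x} {y} {z}"
        if colors is not None:
            r, g, b = colors[i]
            line += f" {r} {g} {b}"
        body.append(line)
    return "\n".join(header + body) + "\n"
```

Writing the file is then a single call, e.g. `open("map.ply", "w", encoding="ascii").write(ply_text(points))`.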

OpenVPS map: 3D point cloud of the square in front of the Nokia Budapest office

Map Aligner

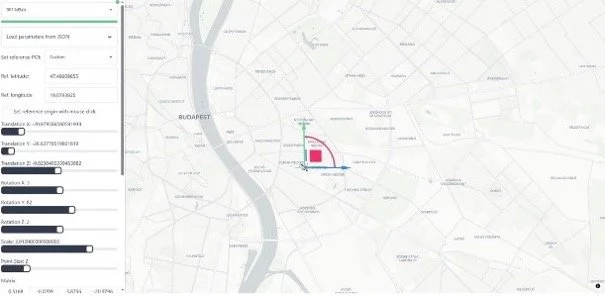

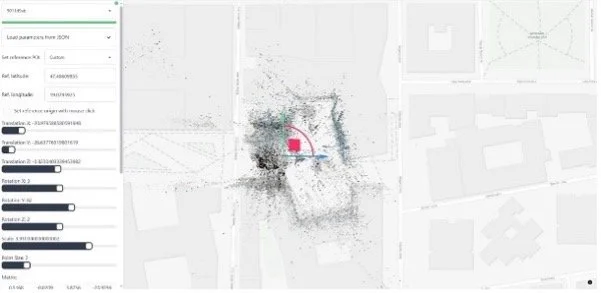

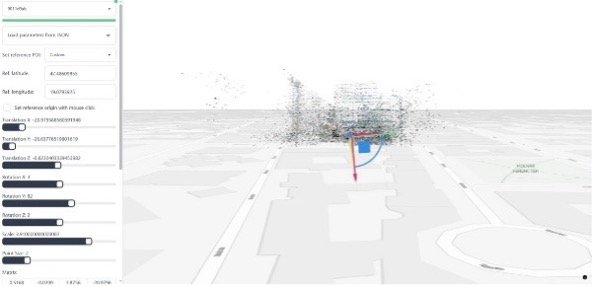

The point cloud map alone has little value unless it is aligned with the world. For this task, we created a Web-based GUI from scratch that loads OpenStreetMap building footprints as a background, loads the PLY point cloud, and lets the user manually align the point cloud with the building shapes.

The MapAligner interface requires a reference point expressed in geographic coordinates; from this reference point and the manual alignment of the point cloud, it computes the corresponding transformation matrix. Every parameter (translation, rotation, scale, and geographic reference) can be adjusted not only with the gizmo but also with more precise sliders and input fields.
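The kind of transform MapAligner produces can be sketched as a 4x4 similarity matrix: uniform scale, rotation, and translation that take map-frame points into local metric coordinates around the geographic reference point. For simplicity the sketch assumes the map is already gravity-aligned with z pointing up and only rotates about that vertical axis (yaw); the real tool also exposes the other rotation axes. The function names are hypothetical.

```python
import math


def alignment_matrix(scale, yaw_deg, tx, ty, tz):
    """4x4 similarity transform: uniform scale, rotation about the vertical (z)
    axis by yaw_deg, then translation (tx, ty, tz) in metres relative to the
    geographic reference point."""
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    return [
        [scale * c, -scale * s, 0.0, tx],
        [scale * s, scale * c, 0.0, ty],
        [0.0, 0.0, scale, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def apply(matrix, point):
    """Transform a 3D point with a 4x4 homogeneous matrix."""
    x, y, z = point
    return [row[0] * x + row[1] * y + row[2] * z + row[3] for row in matrix[:3]]
```

For example, with scale 2, yaw 90 degrees, and translation (10, 0, 1), the map point (1, 0, 0) lands at approximately (10, 2, 1) in the local frame.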

The process of manually aligning the point cloud map with the world using our MapAligner

Map Localiser

The MapLocaliser is the third new Web service. It loads a map together with its alignment transform and implements the GeoPoseProtocol REST API: client applications send a query image, and the service returns the estimated GeoPose in response.
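The request flow of such a service can be sketched as a plain handler function, independent of any Web framework. Assumptions, all hypothetical: the image sits at `sensorReadings.cameraReadings[0].imageBytes` as base64, the map-frame localiser and the map-to-GeoPose conversion are injected as callables (in the real service these would wrap HLOC and the MapAligner transform), and the status codes are arbitrary choices.

```python
import base64
import json


def handle_geopose_request(body, localise, to_geopose):
    """Hypothetical MapLocaliser request handler.

    `localise(image_bytes)` returns a camera pose in the map frame, or None if
    localisation fails; `to_geopose(pose)` converts that pose into a GeoPose
    dictionary using the map's alignment transform.
    Returns an (HTTP status, response dict) pair.
    """
    request = json.loads(body)
    camera = request["sensorReadings"]["cameraReadings"][0]
    image = base64.b64decode(camera["imageBytes"])

    pose = localise(image)
    if pose is None:
        return 404, {"id": request.get("id"), "error": "localisation failed"}
    # Echo the request id so the client can pair the answer with its query.
    return 200, {"id": request.get("id"), "geopose": to_geopose(pose)}
```

Keeping the handler free of framework code makes it easy to test with stub localisers before wiring it to an HTTP server.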

We had initially planned for this component to be fully functional by the second milestone, but unforeseen difficulties arose because HLOC required significant refactoring. The HLOC framework was originally designed for offline benchmarking, that is, localising a large number of images in a batch. To localise a single query image live, we had to reorganise the HLOC code substantially, which took a considerable amount of unplanned time. As a result, this component became fully functional only in Phase 3, which left little time for some of the planned discovery features.

An end-user device sends a query image (or several images, and potentially other sensor input) to the MapLocaliser, which passes it to the core localisation method(s). The 2D visual features of the query are matched with the 3D feature points of the spatial map to estimate the six-degrees-of-freedom pose of the camera in the map frame. This pose is then mapped to the world through our geo-registration transform. We spent a considerable amount of time debugging the alignment transforms and the conversion to the East-North-Up (ENU) frame that GeoPose requires (in which the identity orientation corresponds to a camera looking east).
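The last step of the position conversion can be sketched as follows: a point given as an East-North-Up offset in metres from the geographic reference point is turned into latitude, longitude, and height using the WGS84 meridian and prime-vertical radii of curvature. This is a small-offset approximation, adequate for maps spanning a few hundred metres; larger areas would call for a full ENU to ECEF to geodetic conversion (for example with a projection library). The orientation part of the GeoPose needs its own conversion and is not covered here.

```python
import math

WGS84_A = 6378137.0           # semi-major axis [m]
WGS84_E2 = 6.69437999014e-3   # first eccentricity squared


def enu_offset_to_geodetic(lat0_deg, lon0_deg, h0, east, north, up):
    """Approximate (lat, lon, h) of a point offset by (east, north, up) metres
    from the reference point (lat0_deg, lon0_deg, h0)."""
    lat0 = math.radians(lat0_deg)
    w = math.sqrt(1.0 - WGS84_E2 * math.sin(lat0) ** 2)
    m = WGS84_A * (1.0 - WGS84_E2) / w ** 3   # meridian radius of curvature
    n = WGS84_A / w                           # prime-vertical radius of curvature
    dlat = north / (m + h0)                   # radians
    dlon = east / ((n + h0) * math.cos(lat0)) # radians
    return lat0_deg + math.degrees(dlat), lon0_deg + math.degrees(dlon), h0 + up
```

At a latitude of about 47.5 degrees, a 1000 m northward offset corresponds to roughly 0.009 degrees of latitude.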

Authentication and deployment

Because 3D maps contain sensitive data and must be protected, we integrated authentication into OpenVPS; this was not originally planned and required a significant amount of time. We initially implemented Keycloak-based authentication, but it proved too complex because of its cloud components, so we replaced it with FusionAuth-based authentication, which can run locally on the host machine. There is currently only one shared user, which is sufficient for demo purposes; user group management could be added in the future.

We have packaged the MapBuilder, MapAligner, and MapLocaliser components as Docker containers and made the system easy to deploy with Docker Compose files. The service currently runs on a server in the Nokia network, and we plan to deploy it on AWS later.

Interoperability between visual positioning services

Besides our newly developed OpenVPS, the OSCAR4US project team is also collaborating with two commercial VPS providers, Augmented City (Italy) and Immersal (Finland), which agreed to wrap their proprietary solutions with the OARC GeoPoseProtocol API. This allows the services to be used interchangeably in AR experiences and establishes interoperability between two proprietary VPS providers.
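Because all services speak the same protocol, a client can simply try them in turn with the same request body. The sketch below returns the first successful answer from a list of endpoints. The HTTP transport is injected as `post(url, body)` returning `(status, parsed_json)`, and treating a response as successful when it is HTTP 200 and contains a `geopose` key is a hypothetical convention for this illustration.

```python
def localise_with_fallback(request_body, endpoints, post):
    """Try GPP-compatible VPS endpoints in order; return (url, response) from
    the first one that localises the query.

    `post(url, body)` is an injected HTTP function returning
    (status_code, parsed_json) and may raise OSError on network failure.
    """
    errors = {}
    for url in endpoints:
        try:
            status, response = post(url, request_body)
        except OSError as exc:  # service unreachable: try the next one
            errors[url] = str(exc)
            continue
        if status == 200 and "geopose" in response:
            return url, response
        errors[url] = f"HTTP {status}"
    raise RuntimeError(f"no VPS could localise the query: {errors}")
```

The order of `endpoints` could itself come from a discovery step, for instance the services known to cover the user's coarse location.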

Augmented City Visual Positioning System

The Augmented City VPS technology, developed and based in Italy, is designed primarily for outdoor localisation and can serve, for example, as a basis for tourism services.

Within this project, Augmented City developers implemented the GPPv2 API, which was successfully tested with the OSCP WebXR client (SpARcl). The new code has been deployed to the production servers and is now available to anyone.

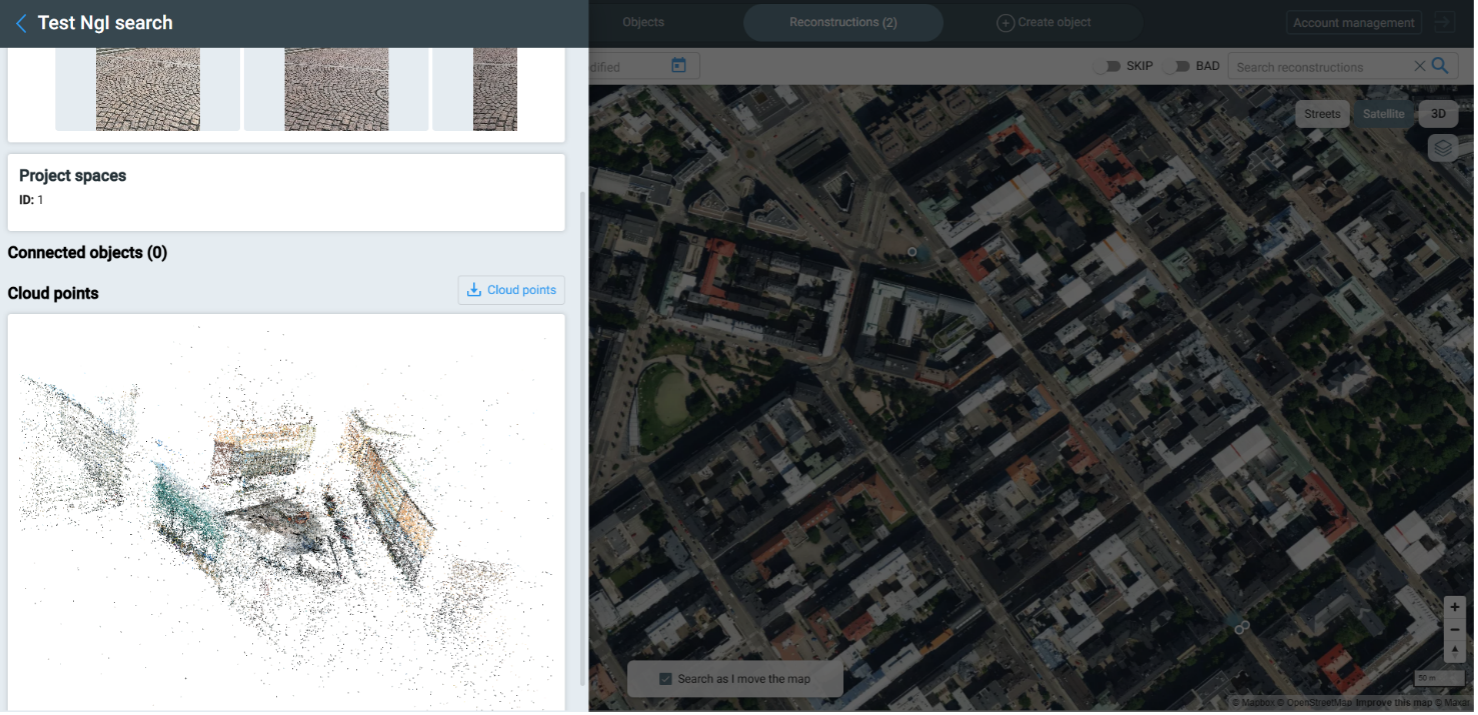

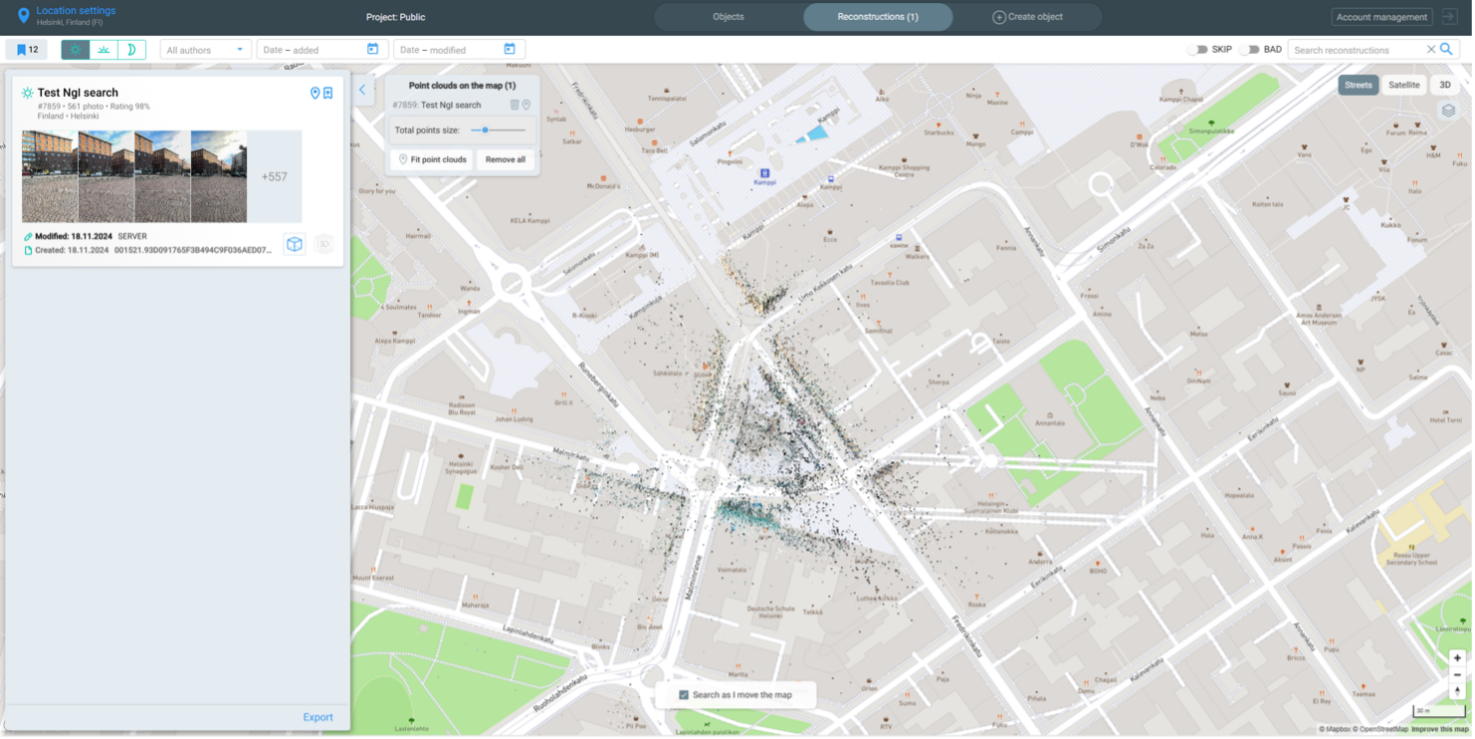

We mapped the target areas in Helsinki and Budapest with the proprietary Augmented City Scanner app. Their cloud service reconstructed the point cloud map of each area, which we manually geo-aligned using their Web interface shown above. Localisation worked well with both the proprietary AC protocol and the older GeoPoseProtocol v1.

Immersal Visual Positioning System

The Immersal VPS technology from Finland is suitable for both indoor and outdoor localisation with applications ranging from indoor navigation, games, sports and entertainment events to industrial use cases.

Immersal first introduced a GeoPose localisation endpoint in its public REST API in 2021. Initially, the endpoint used a proprietary format for encoding location and orientation, based on early drafts of the OSCP GeoPoseProtocol v1. Immersal has now made the endpoint fully compliant with GeoPoseProtocol v2. The prototype implementation is currently running on a test server (http://13.51.161.245/geoposeoscp), but it has not yet been released in a product-ready form.

The proprietary Immersal VPS client requires knowledge of the ID(s) of the map(s) to be searched. As this information is not available to our generic (universal) VPS client, Immersal must rely on a coarse location estimate (for example, from GPS) that the client submits in the VPS request. Map selection works as follows: all Immersal maps are geohashed (tagged with their location) upon creation, provided they were mapped with GPS enabled, so maps can be queried by latitude/longitude and a search radius. The search radius is a parameter of Immersal’s protocol but not of OSCP GPPv2, so the wrapper currently uses a default value of 200 metres. All maps must also be public, because the Immersal-specific user tokens were removed as well.
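The selection rule described above (public maps within a radius of the client's coarse position, 200 m by default) can be sketched with a great-circle distance filter. This is a brute-force illustration, not Immersal's implementation, which the text says relies on geohash tags; the `(map_id, lat, lon, is_public)` tuple schema is hypothetical, and the haversine formula assumes a spherical Earth.

```python
import math

EARTH_RADIUS_M = 6371000.0  # mean Earth radius


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two coordinates (spherical Earth)."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp, dl = p2 - p1, math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def maps_near(maps, lat, lon, radius_m=200.0):
    """IDs of public maps within radius_m of the coarse client position, nearest first.

    `maps` is a list of (map_id, lat, lon, is_public) tuples.
    """
    hits = []
    for map_id, mlat, mlon, is_public in maps:
        d = haversine_m(lat, lon, mlat, mlon)
        if is_public and d <= radius_m:
            hits.append((d, map_id))
    return [map_id for _, map_id in sorted(hits)]
```

A geohash index serves the same purpose more efficiently, since candidate maps can be looked up by prefix instead of computing a distance to every map.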

The new Immersal GeoPose service can be tested with public maps:

1. Start by creating an Immersal account in the Developer Portal of the developer server at http://13.51.161.245. This server uses a debug database, so existing Immersal production accounts (if you have created one in the past) will not necessarily work here.

2. Use the Immersal Mapper app from the App Store or Play Store to create a map. When you start the app, select "Custom Deployment" instead of "International Server" on the start screen, and enter the IP address mentioned above (see screenshot). Save the map and upload it to the cloud for map construction.

3. After the map has been constructed, change its privacy to 'Public' in the Dev Portal (see the other screenshot).

The point cloud is semi-automatically aligned with the world coordinate frame, although manual adjustments may be necessary. After that, localisation can be performed by posting a GeoPoseRequest to the http://13.51.161.245/geoposeoscp endpoint. The Immersal service registers the latest photo against the point cloud and returns its GeoPose in global coordinates.
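A request to the test endpoint can be prepared with the Python standard library alone. The sketch below builds a JSON POST to the URL given in the text and provides a helper that sends it and decodes the JSON reply. The request body must follow GeoPoseProtocol v2 as implemented by Immersal, which is not reproduced here, and the prototype test server may no longer be online.

```python
import json
import urllib.request

IMMERSAL_TEST_ENDPOINT = "http://13.51.161.245/geoposeoscp"  # test server URL from the text


def make_geopose_post(request_dict, url=IMMERSAL_TEST_ENDPOINT):
    """Prepare (but do not send) an HTTP POST carrying a JSON GeoPose request."""
    return urllib.request.Request(
        url,
        data=json.dumps(request_dict).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(req, timeout=30):
    """Send the prepared request and decode the JSON response body."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Separating request construction from sending makes it possible to inspect or log the exact payload before contacting the server.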

Demonstrator

We integrated our Open VPS and our PoI Search with the service and content discovery mechanisms of the Open Spatial Computing Platform, and we demonstrated all elements of the contributions above. The figure below showcases the geo-localisation of users via our open VPS, the discovery of local PoIs, the creation of new objects by clicking on the ground, and multi-user interaction. No application installation is needed: our demo runs in the Chrome browser on any WebAR-capable Android device. The demo can be set up both indoors and outdoors, and the experience can be quickly deployed at a new location.

Another contribution of this project is enabling users to share their GeoPoses (see video below) and spawned digital objects through a publish-subscribe message broker. Once users have been geo-localised at least once and keep tracking their egomotion, their poses (and those of any dynamic objects) can be expressed as GeoPoses. We also enable spawning new content by clicking on the ground: a raycast in the AR session finds the hit point, and the GeoPose of that hit point is calculated. Such a geo-location cursor can have many interesting future applications. Connecting non-AR viewers (like the Cesium globe view or the traditional map view on the right of the figure) and authoring tools also requires unified handling of locations; hence, there is a need for such an interoperable way of sharing locations, AR objects, and actor poses for truly multi-agent and multi-vendor AR experiences.
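The publish-subscribe pattern behind pose sharing can be sketched in a few lines. The in-memory broker below stands in for a real networked message broker, and the topic name `users/poses` and the message fields (`userId`, `geopose`) are hypothetical examples; each message carries a GeoPose so that any subscriber, whether an AR client, a globe view, or a map view, can place the user in the same world coordinates.

```python
from collections import defaultdict


class GeoPoseBroker:
    """Minimal in-memory publish-subscribe broker (illustration only; a
    deployment would use a networked message broker)."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, callback):
        """Register a callback invoked with every message published on topic."""
        self._subscribers[topic].append(callback)

    def publish(self, topic, message):
        """Deliver a message to all current subscribers of topic."""
        for callback in list(self._subscribers[topic]):
            callback(message)


broker = GeoPoseBroker()
received = []
broker.subscribe("users/poses", received.append)
broker.publish("users/poses", {
    "userId": "alice",
    "geopose": {
        "position": {"lat": 47.5, "lon": 19.0, "h": 110.0},
        "quaternion": {"x": 0.0, "y": 0.0, "z": 0.0, "w": 1.0},
    },
})
```

Decoupling publishers from subscribers means new kinds of viewers can join without any change to the AR clients that publish poses.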